Test Overview

This guide gives an overview of the tests performed before a component, container, product, or system is released.

Intended for

Test engineers, software engineers, hardware engineers, release owners, and product owners.

Introduction

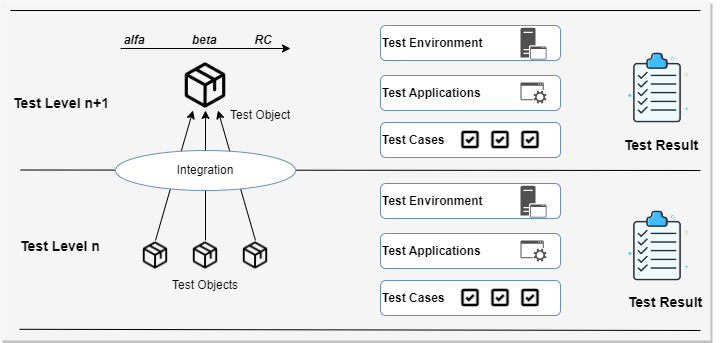

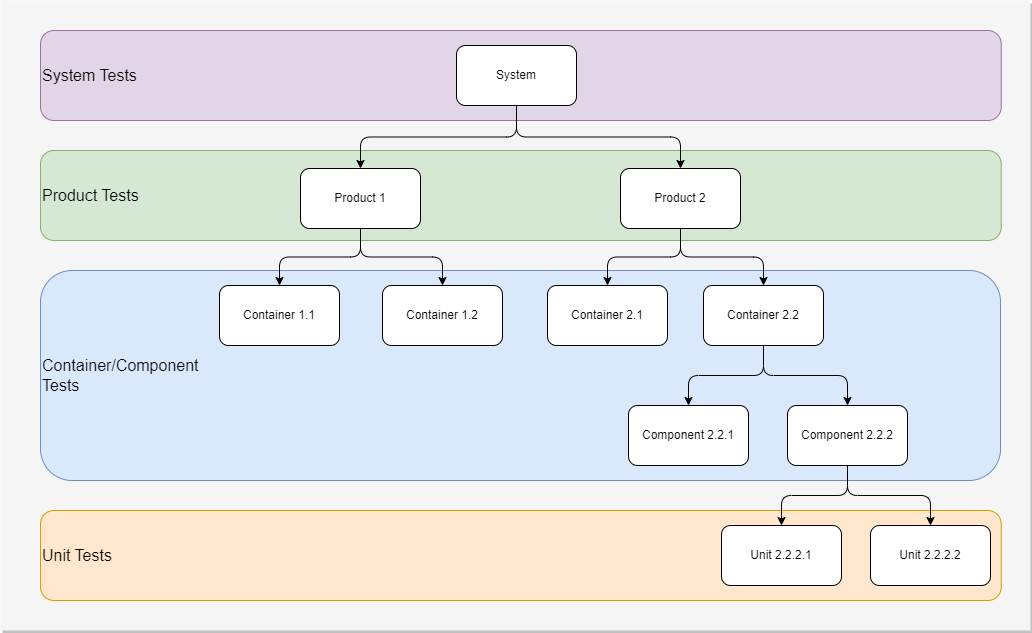

The test objects are integrated from the building blocks (components, containers, and products) described in the architecture. Each integration (test object) represents an executable piece of software and/or hardware that can be tested. For example, software and hardware components can be integrated into a product, which is then tested in a product test environment.

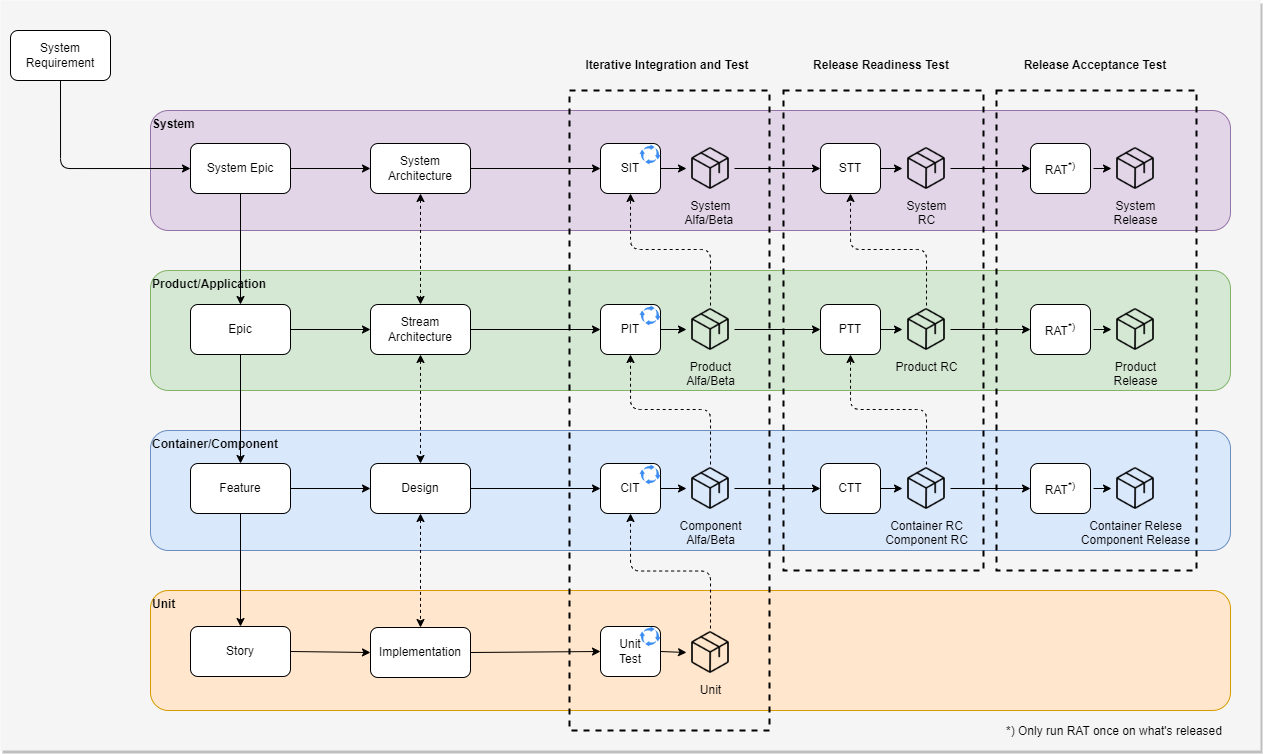

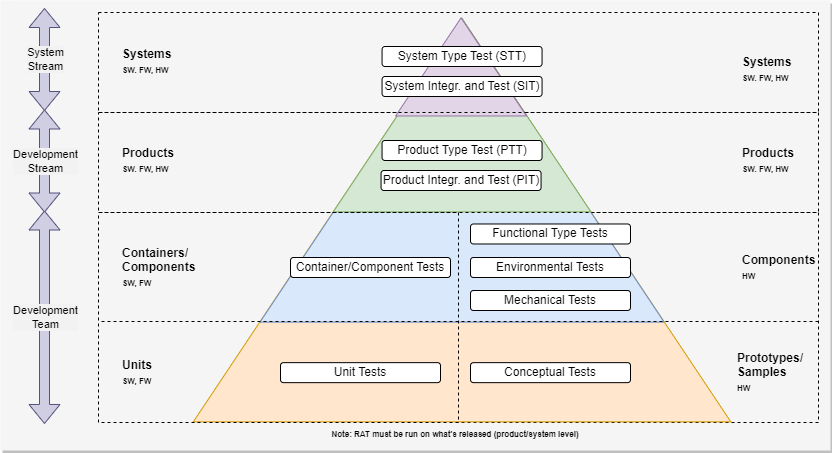

The test levels describe the different test objects (integrations), test environments, and test cases to be executed, either manually or automatically. There are currently test levels for units, components, containers, products, and systems.

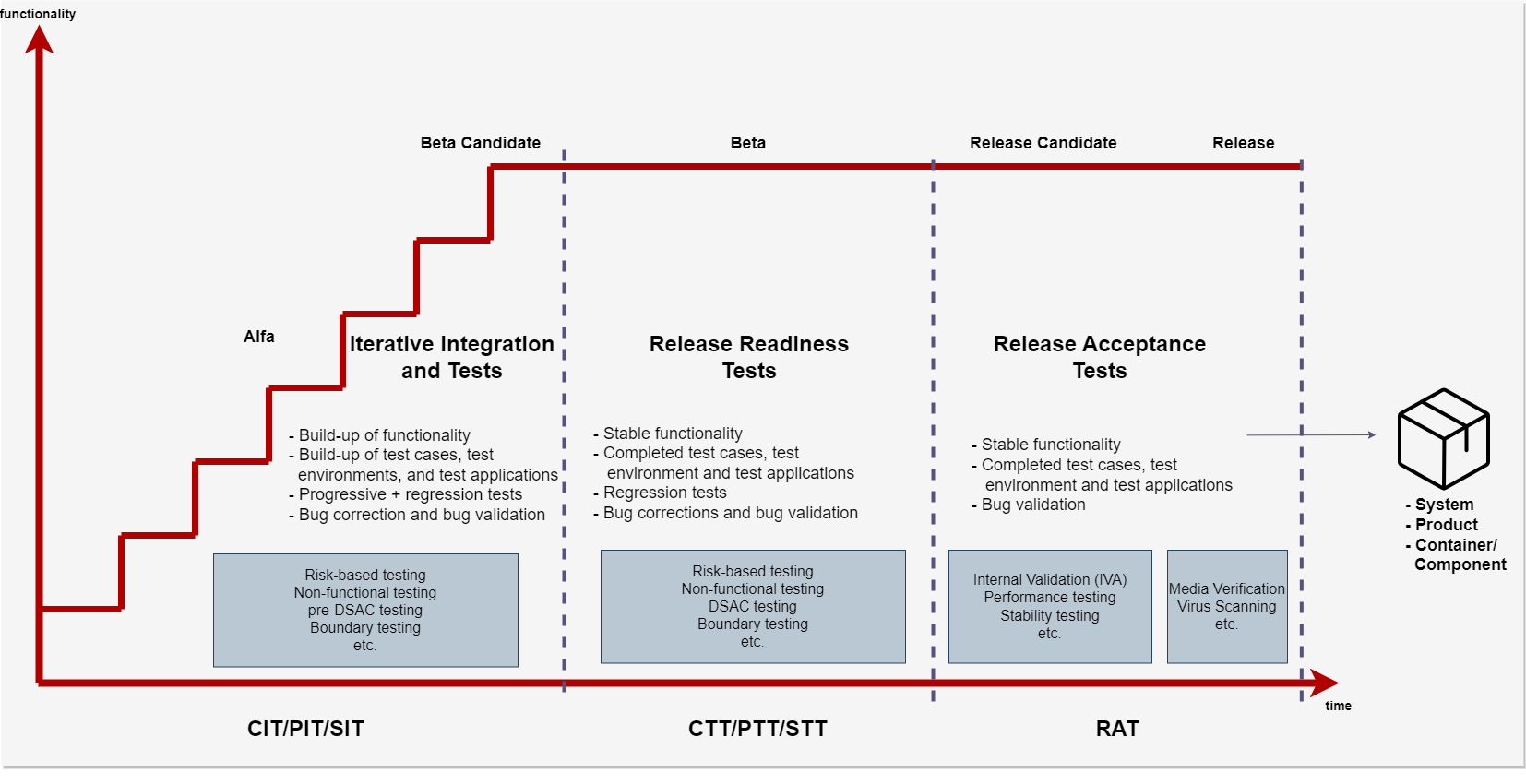

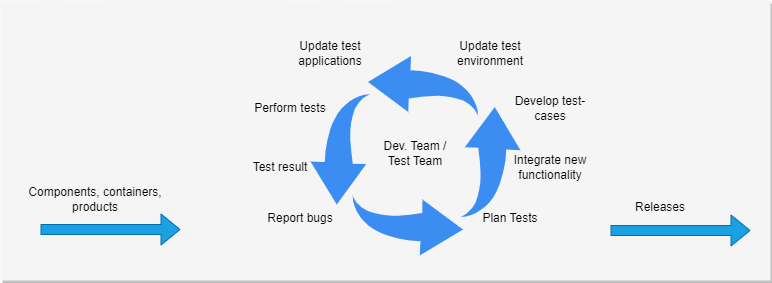

The test stages describe how a component, container, product, or system is built up in steps (iterative integration test, release readiness test, and release acceptance test), and how the development of test cases follows the development of added functionality. Testing should be performed continuously, even before the test object has reached full functionality and is ready for release.

The test objects, test levels, and test stages must take into account how often releases are made (e.g., frequent releases require a higher degree of test automation), applicable standards (security, safety, ISO, explosion protection, etc.), whether changes are minor or major, and the maturity of the test object.

Note: Shift-left testing means moving testing toward the early stages of software development. The goal is that no critical bugs are found during the product type test (PTT) / system type test (STT) and the release acceptance test (RAT).

Test objects and architecture

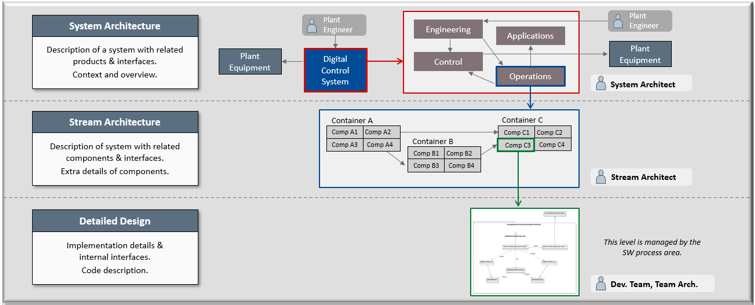

The architecture describes the building blocks (components, containers, products, and systems). These building blocks are defined by the system and stream architecture and the design. Each building block has interfaces and APIs to be tested.

The building blocks in the architecture and design are developed by software and hardware engineers. The implementation can be done in steps to reach the required functionality. Test engineers need to understand the type of each building block to know which test level it belongs to (i.e., which types of tests should be applied) and which stages the test object passes through.

It is important to understand which types of tests can be performed at each integration level. Teams often wait for later integrations to run tests that could have been executed earlier. To "shift left" from a test perspective ("shift-left" is more than just testing), tests need to be executed at the lowest possible level. Also, a test that has been performed on one test level should not need to be repeated on higher test levels.
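The "lowest possible level" rule can be sketched as a small assignment function. This is a minimal illustration, assuming each test case is tagged with the set of test levels whose test object can execute it; the `Level` enum, the `assign_lowest_level` function, and the test case IDs are hypothetical and not part of any existing tool. Assigning each test case to exactly one level also captures the rule that a test performed on one level is not repeated on higher levels.

```python
from enum import IntEnum


class Level(IntEnum):
    """Test levels, ordered from lowest to highest (assumed encoding)."""
    UNIT = 1
    COMPONENT = 2
    CONTAINER = 3
    PRODUCT = 4
    SYSTEM = 5


def assign_lowest_level(executable_at: dict[str, set[Level]]) -> dict[str, Level]:
    """Assign each test case to the lowest level on which it can be executed.

    `executable_at` maps a test case ID to the levels whose test object can
    run it. Each test case gets exactly one level, so it is not repeated on
    higher levels.
    """
    assignment = {}
    for test_id, levels in executable_at.items():
        if not levels:
            raise ValueError(f"{test_id} cannot be executed on any level")
        assignment[test_id] = min(levels)  # IntEnum ordering: lowest level wins
    return assignment


if __name__ == "__main__":
    # Hypothetical test cases, for illustration only.
    cases = {
        "TC-parse-config": {Level.UNIT, Level.COMPONENT, Level.PRODUCT},
        "TC-alarm-list": {Level.SYSTEM},
    }
    for test_id, level in assign_lowest_level(cases).items():
        print(test_id, level.name)
```

A test case that can only run once several products are integrated stays on the system level, while anything that can run earlier is pulled down to the lowest level available.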

From the architecture, we can derive the different integration and test levels. See the C4 model in Architecture Document Structure for more information.

Requirements, test levels, and releases

Generally, system epics, epics, and features represent potentially releasable objects. They must be broken down further for the development teams into stories and tasks. Also, the epics and features must include the work to create test environments, test applications, and test cases needed for the release.

The stories represent implementations, and they are given to the software, hardware, and test engineers to develop. Low-level tests are performed early in combination with code reviews and static code analysis.

Epics and features are potentially releasable

The system epics and epics are larger development initiatives that can be tested and are potentially releasable. A final system or product release often consists of a set of completed epics. The system epics and epics are closed after all their children are closed and a final rerun of all related test cases has passed. System and product tests include testing of non-functional requirements (e.g., performance, capacity, reliability).

Epics and features make it possible to develop new software and hardware stepwise. They are integrated into executable test objects (systems, products, containers/components), together with test cases, test environments, and test applications used in the test execution.

Each completed epic or feature triggers a rerun of all related test cases (regression testing). The new additions should not break previously developed functionality or test cases. Test cases are preferably automated and repeatable, but tests that are performed infrequently can be manual.
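The closure and regression rules for epics and features can be expressed as a traversal over the backlog hierarchy. The sketch below assumes a hypothetical `WorkItem` structure where each item links directly to its test cases and child items, and that the results of the final rerun are available as a mapping from test case ID to pass/fail. A test case with no recorded result is treated as not passed.

```python
from dataclasses import dataclass, field


@dataclass
class WorkItem:
    """Hypothetical backlog item: a system epic, epic, feature, or story."""
    item_id: str
    closed: bool = False
    test_cases: set[str] = field(default_factory=set)
    children: list["WorkItem"] = field(default_factory=list)


def related_test_cases(item: WorkItem) -> set[str]:
    """Collect the test cases linked to an item and to all of its descendants.

    This is the regression set to rerun when the item is completed.
    """
    cases = set(item.test_cases)
    for child in item.children:
        cases |= related_test_cases(child)
    return cases


def can_close(item: WorkItem, rerun_results: dict[str, bool]) -> bool:
    """An item may be closed only when all children are closed and the final
    rerun of every related test case has passed. A test case without a
    recorded result counts as not passed."""
    if not all(child.closed for child in item.children):
        return False
    return all(rerun_results.get(tc, False) for tc in related_test_cases(item))
```

Collecting test cases from the whole subtree means a feature's regression set automatically includes the tests written for its stories.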

Stories represent implementations

The user stories represent detailed development work and are a stepwise development of new functionality. The stories result in units, which are the smallest part of the design that can be tested. The additions are not allowed to break earlier implementations, and regression tests need to be run even on the lowest level.

Since the stories are a breakdown of features, the units (white-box tested) are later integrated into larger components (black-box tested).

Test stages

The test stages are the stages that new or changed functionality must pass before it is released. They consist of a "build-up" stage, where new and changed functionality is tested; a "readiness" stage, where the functionality is stabilized; and a final "release" stage, where scope and quality are accepted.

The test stages are similar for each test level and work for both agile and traditional development. The new and changed functionality must pass the test stages before it is released. The frequency of releases can differ; in agile development, moving through the test stages is quicker.

Iterative integration and test (CIT/PIT/SIT)

- CIT: Component integration and test.

- PIT: Product integration and test.

- SIT: System integration and test.

This stage iteratively builds up the test cases, test environment, and test applications. In each iteration, regression tests are performed on previously developed functions, and new tests are developed for the new functions. Test automation is necessary if the tests are repeated often and fast feedback on issues is needed. The test results are immediately fed back to the developers so they can take corrective action.

In lean-agile development, the components, containers, products, and systems are developed in time-boxed increments and iterations and must carefully consider the tests to be executed.

The test development is therefore closely synchronized with the software and hardware development. Tests are developed and executed in every iteration as the functionality is built up. If both the regressive (existing) and progressive (new) tests pass on a test level, the functionality can be used on a higher test level for further integration and testing.
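The promotion rule at the end of each iteration can be sketched as follows. It assumes the regressive and progressive test results for the current level are available as mappings from test case ID to pass/fail; the names `IterationResult` and `evaluate_iteration` are hypothetical. The failing test cases are returned alongside the decision so they can be fed back to the developers immediately.

```python
from typing import NamedTuple


class IterationResult(NamedTuple):
    promote: bool        # True if the test object may move to the next test level
    failures: list[str]  # failing test case IDs, fed back to the developers


def evaluate_iteration(regressive: dict[str, bool],
                       progressive: dict[str, bool]) -> IterationResult:
    """Decide whether a test object can be promoted to the next test level.

    Both suites must pass: regressive (existing) tests show that earlier
    functionality is not broken, and progressive (new) tests show that the
    added functionality works.
    """
    failures = sorted(
        test_id
        for suite in (regressive, progressive)
        for test_id, passed in suite.items()
        if not passed
    )
    return IterationResult(promote=not failures, failures=failures)
```

Treating both suites the same way reflects the rule that a regression failure blocks promotion just as much as a failing new test.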

Note: With iterative development, continuous test runs are necessary to ensure that the software functions properly. That is why test automation is often the preferred method, especially at lower levels. There are, of course, cases where manual testing is necessary, for example when automation is not possible or when the tests are not repeated very often.

Release readiness test (CTT/PTT/STT)

- CTT: Component type test.

- PTT: Product type test.

- STT: System type test.

The release readiness test is a final test run performed when the expected release scope is in beta status. During this stage, no new functionality may be added (or at least not without an approved change request that includes an impact analysis). If the earlier stages were successful and bugs were found and corrected early, ideally only one run through all the test cases is needed, using the same test cases developed in the previous stage.

This stage focuses on ensuring that the release is stable and reliable and that no high-severity or critical bugs remain.
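The exit condition of the release readiness test can be sketched as a check over the final test run and the open bug list. This assumes a hypothetical four-step severity scale (`low`, `medium`, `high`, `critical`) and a hypothetical `Bug` record; the actual severity names and bug tracker fields will differ between organizations.

```python
from dataclasses import dataclass

# Hypothetical severity scale; only the two highest levels block the release.
BLOCKING_SEVERITIES = {"high", "critical"}


@dataclass(frozen=True)
class Bug:
    bug_id: str
    severity: str   # one of "low", "medium", "high", "critical" (assumed scale)
    is_open: bool


def readiness_test_passed(results: dict[str, bool], bugs: list[Bug]) -> bool:
    """Return True if every test case in the final run passed and no
    high-severity or critical bug is still open.

    An empty test run does not count as a pass.
    """
    all_passed = bool(results) and all(results.values())
    blocking = [b for b in bugs if b.is_open and b.severity in BLOCKING_SEVERITIES]
    return all_passed and not blocking
```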

Release acceptance test (RAT)

RAT is the last testing performed to assess whether the final integration of software and hardware is ready for release and delivery. RAT should be performed on every type of release (component, container, product, or system). The purpose is to check that the core system is intact and unaffected by late changes in the software or hardware. All deliverables must be in release candidate (RC) status before the RAT can start.
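The RAT entry condition can be checked mechanically before the stage starts. The sketch assumes each deliverable's status is tracked as one of a few hypothetical values (`development`, `beta`, `rc`); only the beta and RC statuses come from this guide. The function returns the deliverables that still block the RAT, so an empty list means the RAT can start.

```python
from enum import Enum


class Status(Enum):
    DEVELOPMENT = "development"  # assumed status, not defined in this guide
    BETA = "beta"
    RC = "rc"                    # release candidate


def rat_blockers(deliverables: dict[str, Status]) -> list[str]:
    """List the deliverables that are not yet in RC status.

    The RAT can start only when this list is empty.
    """
    return sorted(name for name, status in deliverables.items()
                  if status is not Status.RC)
```

Returning the blocking deliverables, rather than a plain yes/no, tells the release owner exactly what is still missing.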

The results from the type tests in the earlier stage help determine whether the deliverable/release fulfills the users' needs. Getting direct feedback from customers is crucial for success.

The system/product/component test environments and applications must already be prepared and correctly configured before the RAT starts. Any changes to software (patches, etc.) or hardware should be avoided. The release object dedicated to endurance testing is run continuously for a predefined duration at normal load, without any software changes or disturbances. Testers shall use the user manuals for the test activities in this stage.

If a severe problem is found during the RAT and this requires a correction, a decision meeting is required involving the head of quality to decide whether the RAT should restart or continue.

Note: Only one RAT is performed, on the test level from which the component/container/product/system is released.

Test levels

The test levels are aligned with the architecture and the building blocks in the architecture.

System tests

The system tests focus on testing system epics and their acceptance criteria. The focus is on functionality across products (e.g., "alarm & event" needs to include at least a controller and an operator workplace to be able to generate alarms and visualize them in an alarm list).

The system test also focuses on non-functional requirements to make sure the entire system fulfills the expectations, e.g., performance, endurance, and scalability.

A system release consists of many products integrated into a test environment. A system stream coordinates deliverables from development streams to make sure a system can always be integrated and tested.

For further details, see the System Test guide.

Note: System tests belong to the "test" process area.

Product tests

The product test focuses on testing epics and their acceptance criteria. The focus is on the product to be delivered, not the surrounding products (which support the tests, e.g., by generating data). Epics allocated to a development stream are the main input for the product test to verify.

Non-functional requirements can be tested in product tests, but with a focus on the product. For example, display exchange times can be measured for the graphics parts.

A product can be a standalone product delivered by a development stream. In that case, a system-level test does not need to be performed.

For further details, see the Product Test guide.

Note: Product tests belong to the "test" process area.

Container/component tests

Containers represent an application or data storage. Other parts of the system are dependent on the container to be able to run. The container can be used to test a set of components.

The component tests focus on features and their acceptance criteria. Components are building blocks for containers. To test a single component, you may need to provide additional code (mock-up), build scripts, and test code to create an executable and a test environment.
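To illustrate the additional code needed to test a single component, the sketch below uses Python's standard `unittest.mock` to stand in for a neighboring component. The `AlarmFilter` component, its `publish` dependency, and the severity threshold are hypothetical. The point is that the mock replaces the real dependency, so the component can be built into an executable and tested on its own.

```python
import unittest
from unittest import mock


class AlarmFilter:
    """Hypothetical component under test: forwards alarms at or above a
    severity threshold to a sink provided by another component."""

    def __init__(self, sink, threshold: int):
        self._sink = sink
        self._threshold = threshold

    def handle(self, alarm: dict) -> bool:
        """Forward the alarm if it is severe enough; return True if forwarded."""
        if alarm["severity"] >= self._threshold:
            self._sink.publish(alarm)
            return True
        return False


class AlarmFilterComponentTest(unittest.TestCase):
    def setUp(self):
        # The real sink belongs to another component; a mock stands in for it
        # so this component can be executed and tested in isolation.
        self.sink = mock.Mock()
        self.component = AlarmFilter(self.sink, threshold=3)

    def test_forwards_alarm_at_threshold(self):
        alarm = {"id": "A1", "severity": 3}
        self.assertTrue(self.component.handle(alarm))
        self.sink.publish.assert_called_once_with(alarm)

    def test_drops_alarm_below_threshold(self):
        self.assertFalse(self.component.handle({"id": "A2", "severity": 1}))
        self.sink.publish.assert_not_called()
```

The tests can be run with the standard `python -m unittest` runner. Because the mock records every call, the test checks how the component uses its interface to the neighboring component, not just the return value.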

Note: Container/component tests belong to the Software Development process area. Component tests for hardware (environmental tests, mechanical tests, etc.) belong to the Hardware Development process area.

Unit tests

Unit testing is the first level of testing and is often performed by the developers themselves. It ensures that individual units of software are functional at the code level and work as designed. In a test-driven environment, developers typically write and run the tests before the software or feature is passed over to the test team.
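A unit test at this level exercises a single function directly, with knowledge of its internal logic (white-box). The sketch below uses Python's standard `unittest` module. The `scale` function, which converts a raw sensor count into an engineering value, is a hypothetical unit chosen only for illustration. In a test-driven workflow, the test class would be written first and the function implemented until the tests pass.

```python
import unittest


def scale(raw: int, raw_max: int, eng_min: float, eng_max: float) -> float:
    """Hypothetical unit: linearly scale a raw count in [0, raw_max]
    to an engineering range [eng_min, eng_max]."""
    if raw_max <= 0:
        raise ValueError("raw_max must be positive")
    if not 0 <= raw <= raw_max:
        raise ValueError(f"raw value {raw} outside 0..{raw_max}")
    return eng_min + (eng_max - eng_min) * raw / raw_max


class ScaleUnitTest(unittest.TestCase):
    def test_endpoints_map_to_range_limits(self):
        self.assertEqual(scale(0, 4095, -50.0, 150.0), -50.0)
        self.assertEqual(scale(4095, 4095, -50.0, 150.0), 150.0)

    def test_midpoint(self):
        self.assertAlmostEqual(scale(2048, 4096, 0.0, 100.0), 50.0)

    def test_rejects_out_of_range_input(self):
        # White-box test of the input guard inside the unit.
        with self.assertRaises(ValueError):
            scale(4096, 4095, 0.0, 1.0)
```

Tests like these are cheap to run on every change, which makes them a natural part of the team's regression suite.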

Unit testing also makes debugging easier, because issues found early take less time to fix than issues discovered later in the testing process.

Note: Unit tests belong to the Software Development process area.

Test strategy

When planning a release project, it's important to have a well-defined "Test Strategy and Plan" document in place. This test strategy should outline the different levels of testing that will be performed, as well as the specific testing methods that will be used within each level.

Its primary use is to adapt the test process to the release context. This helps ensure that the test process is efficient and effective, resulting in a high-quality release. By carefully planning and executing the test process, developers can ensure that their software is reliable, stable, and ready for release.

Some standards (e.g., Security IEC 62443) demand independent tests. If required, make sure to describe in the test strategy document how to achieve independence for a test level when tests are performed by the development teams.

The test strategy should consider the test levels described above for system and product development.

The test strategy can be defined on the system or development stream level for a product line if it doesn't change too much between releases.

The test strategy document should contain the following chapters:

- Release type and context: Explain the type of project (rollup, product/system release) and the specific release context impacting tests (e.g., new development, bug fixes, integration from suppliers).

- Test levels: Explain the tailoring of test levels, which could be unit, component, container, product, or system testing.

- Scope: List the areas to test. Also mention anything excluded from the scope or items not being tested.

- Entry and exit conditions: Explain the tailoring of entry and exit criteria.

- Environment: Describe where the testing takes place. Is it in a development, test, staging, or production environment? If a database is involved, which environment does it point to? Make sure to list any URLs.

- Team responsibilities: Describe who is responsible for performing each task. The description may include people from outside the testing team. For example, developers may be responsible for unit testing, or stakeholders may be responsible for acceptance testing. A tester may execute scripts, and a test manager may be accountable for reporting.

- Testing tools: Define any tools needed for testing and test case management.

- Techniques: Explain the test conditions and how test cases are defined. Is test data creation needed? If so, explain what is required and when.

- Bug management: Explain the tailoring of the bug management process (e.g., communication of bugs to customers).

- Release control: Define who will be responsible for the release. Will it be done in iterations or through continuous integration and delivery (CI/CD)? What approvals are needed before the release? If there is a review board process, include that information, because it can affect the release's timing.

- Metrics and reporting: Describe the method for recording metrics and who is responsible for reporting. You may need input from a product manager on acceptable values for each metric.

- Risk and mitigation: You may already be aware of threats before testing. If possible, describe ways to mitigate risk.

- Summary: Include a basic overview of the testing and its work products.

Test responsibility and accountability

The test levels must have assigned responsibility and accountability. A poorly executed test level can have a big impact on test levels later in the chain.

- Unit tests: The development teams prepare and perform the unit tests. The team also decides which tests are needed in the regression suite to avoid regression bugs.

- Component/container tests: The development teams integrate the components, develop tests, set up suitable test environments, and develop test applications.

- Product tests: The product tests are the stream's responsibility and can be performed by the development teams. In some cases, when independent tests are required, a separate product test team may need to take responsibility for the tests.

- System tests: The system test teams are independent of the development streams and are responsible for the tests.

The product owners are accountable for the quality of the deliverables and approve the test result before the epics and features are closed.

Q&A

Who is responsible for executing tests?

Usually,

- CIT/CTT is performed by the development teams.

- PIT/PTT is performed by the development teams, or a separate product test team (see earlier note on independence).

- SIT/STT is performed by the system teams.

Flexibility to move test cases depending on capacity, test environment, and/or test applications should be available on product and system test levels. However, the accountability of the test result must always reside with the stream that owns the test level. For instance, if selected product tests are executed on the system level due to resource issues, it is the development stream that is accountable for the test result.

How are capabilities tested?

The capabilities follow the lifecycle of a product and its components, serving as a record of what has been implemented from the initial release onward.

The development team updates the capabilities of the product and its components to keep track of what has been implemented in each release. This ensures that the product is always up-to-date and able to meet the needs of its users.

Remember that capabilities never "die", so any updates to them will require an updated test case to ensure proper functionality.