Product Test Overview

The product test verifies that the product to be released has acceptable quality. It applies to new products and maintenance/updates of existing products.

The objectives of the product test are to ensure that:

- The epics are fulfilled.

- The product has the functionality specified for our stakeholders.

- The product does not include undesired functions or unwanted or unexpected effects.

- The usability is good.

For a description of test stages and levels, see Test Overview.

Intended for

Test engineers, software engineers, hardware engineers, release owners, and product owners.

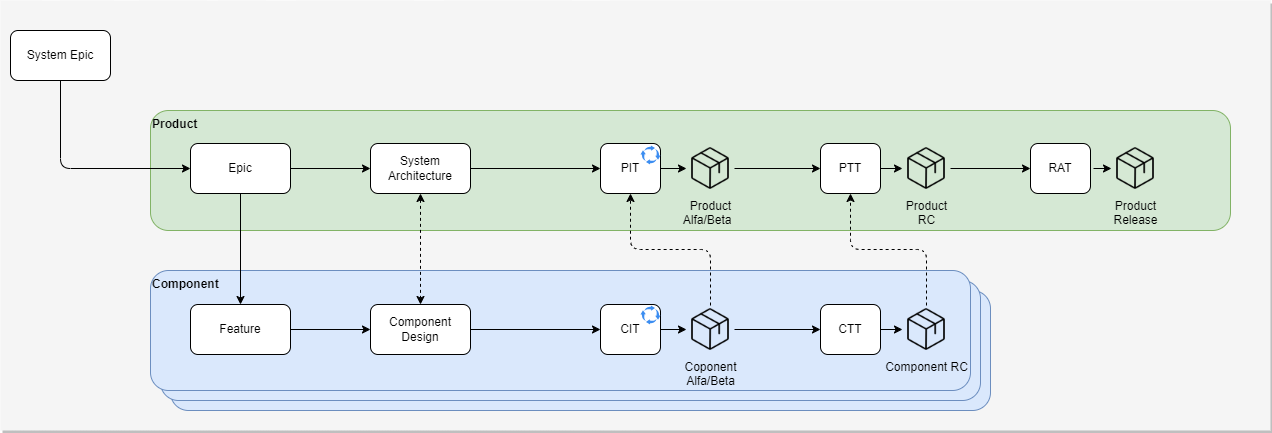

System epics, deliverables, and releases

Product owners refine system epics into epics. Together with the development teams, they define features that drive the development. This results in software and hardware deliveries of integrated and tested components. (See How-to Work with Epics for details.)

The product test engineers develop the test cases using the information in the epics, mainly the acceptance criteria. If any issues are found during testing, they are reported as bugs for further investigation and correction.

If an epic is implemented in just one component, product integration and test (PIT) is typically covered by the component-level tests.

If an epic's features are independent vertical slices (each implementing end-to-end functionality), PIT is typically done as part of each feature.

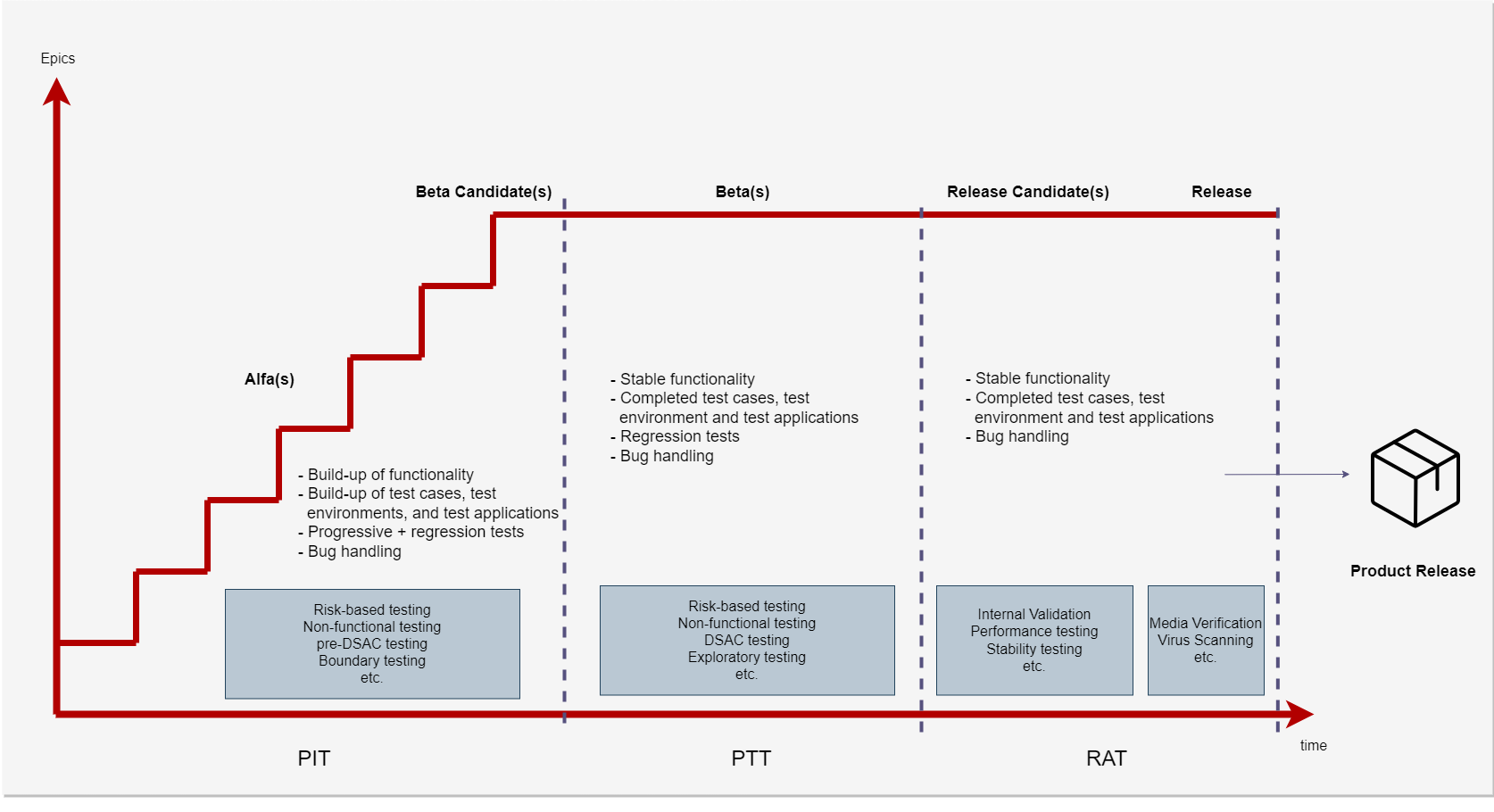

Product test stages

The product test process supports agile development and is aligned with increments and iterations. After passing the component tests, the development teams' deliverables can be integrated into the product test environments. Product test cases can be developed iteratively as functionality is added in the deliverables.

Product test activities

Introduction

Product test consists of the following activities:

- Planning and setup

- Product integration and test (PIT)

- Product type test (PTT)

- Release acceptance test (RAT)

Planning and setup

During the initial test planning, the test lead writes the project test strategy in the "Test Strategy and Plan" document, which covers all test activities, including product tests.

The test strategy defines the required test coverage on each test level, including tests of new and changed functionality and regression tests of existing functionality. It shall also state who performs the product test (the development teams or a separate product test team), the test environment, the test tools, and any tailoring of or additions to this test process.

For each epic, iterative test planning is needed to identify test cases for new and changed functionality and to select the regression tests required to ensure that nothing has been broken in the build. Updates to the test environment and test applications must also be planned, since they are not a negligible effort.

PIT shall be managed as a feature in the development epics.

PTT shall be a separate enabler epic in the release. The PTT epic shall be refined into features for its different parts, such as automated tests, manual tests, exploratory tests, and vulnerability and robustness testing (DSAC).

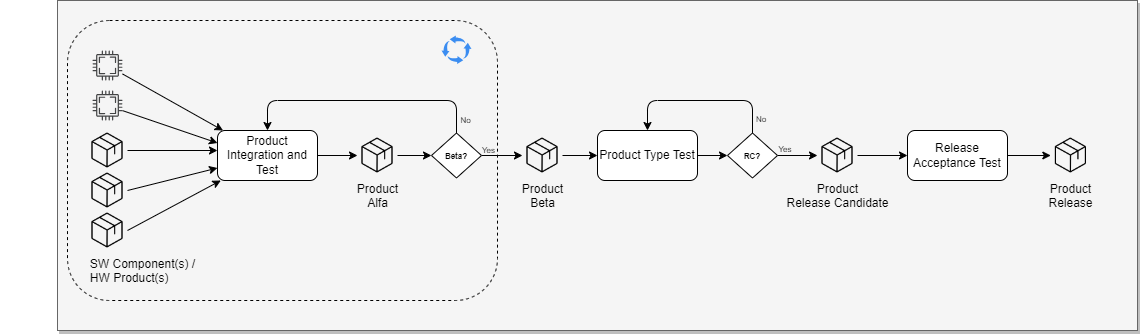

Product integration and test

PIT continuously integrates software components and hardware products into executable test objects. The test cases, test environments, and test applications are also built stepwise to verify the epics. The goal is to automate as much of PIT as possible, since the tests will be repeated many times.

The input to PIT consists of software components and hardware products.

The entry criterion for PIT is that the epic's definition of ready (DoR) is met.

Activities for PIT include:

- Monitoring of software/hardware status.

- Build and integrate with related tests (smoke tests to confirm a successful build).

- Development of product test cases. The basis for writing the test cases is the acceptance criteria in the epic.

Ensure that Gherkin syntax is used in the epic acceptance criteria, since it simplifies the development of test cases:

Given [initial context]
When [event]
Then [expected outcome]
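Where acceptance criteria are stored as text, a simple check can flag criteria that do not follow this pattern before test cases are written from them. The following Python sketch is illustrative only: the `is_gherkin` helper is hypothetical, is not part of SpecFlow or ADO, and only checks that Given, When, and Then clauses appear in that order rather than parsing full Gherkin.

```python
import re

# Hypothetical helper: a simplified order check, not a full Gherkin parser.
# Case-insensitive; "And"/"But" lines in between are allowed because the
# lazy ".*?" skips over them.
_GHERKIN = re.compile(
    r"^\s*Given\s+\S.*?\bWhen\s+\S.*?\bThen\s+\S",
    re.IGNORECASE | re.DOTALL,
)


def is_gherkin(criterion: str) -> bool:
    """Return True if the criterion starts with Given, then has When, then Then."""
    return _GHERKIN.search(criterion) is not None
```

For example, "Given the controller is running / When the operator changes the setpoint / Then the new value is displayed" passes, while a free-text criterion such as "The operator can change the setpoint" is flagged.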

The types of product test cases include:

- Integration tests: To verify interfaces and interaction between the components.

- Product tests: To verify the acceptance criteria of the epics.

- Benchmark tests: To verify consistent behavior with legacy products.

- Performance tests: To verify response times and load.

- Interoperability tests: To verify that the product works with other products.

The remaining PIT activities are:

- Review and approval of the test cases.

- Execution of test cases: Test cases are first executed manually and then added to the test pipeline.

Bugs found during PIT are reported to the development teams for correction. When an epic has been successfully tested and its definition of done (DoD) is met, it is set to "Closed". PIT continues until all epics have been closed.

Exit criteria:

- All epics have been set to "Closed".

The output of PIT is an integrated product with beta status, together with the reported bugs.
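If epic states are exported from the backlog (for example, from ADO), the PIT exit criterion can be checked in a script. A minimal sketch, assuming a hypothetical `Epic` record; the field names and state values are assumptions, not the ADO work item schema.

```python
from dataclasses import dataclass


@dataclass
class Epic:
    # Hypothetical record; real data would come from a backlog export.
    epic_id: int
    title: str
    state: str  # assumed values, e.g. "Active", "Resolved", "Closed"


def open_epics(epics: list[Epic]) -> list[Epic]:
    """Return the epics that still block the PIT exit criterion."""
    return [epic for epic in epics if epic.state != "Closed"]


def pit_exit_criteria_met(epics: list[Epic]) -> bool:
    """PIT exit criterion: all epics have been set to "Closed"."""
    return not open_epics(epics)
```

Listing the open epics, rather than returning only a yes/no answer, shows directly which epics still keep PIT running.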

Product type test

The purpose of the PTT is to ensure release readiness. During this stage, new functionality must not be added unless a change request, including an impact analysis, has been approved. If the earlier stages were successful and bugs were found and corrected early, ideally only one run-through of all the test cases is needed, using the same test cases developed in PIT. One difference between PIT and PTT is that PTT requires more documentation: a test log is saved, and a test report is written.

Input to PTT is the fully integrated product (software and hardware parts) and the approved test cases from PIT.

Entry criteria:

- All epics/features closed.

- Beta criteria met.

Activities for PTT include:

- Execution of product test cases (automatic and manual).

- Exploratory testing with feedback from stakeholders who have extensive product experience. The focus is on system behavior triggered by change, such as reconfiguration, operators changing values, power failure, and network failure.

- Pre-DSAC (Achilles and Nessus). If it can be automated, this activity can be moved to PIT.

- Order DSAC.

- Reporting in a test report.

Exit criteria:

- Release candidate established.

- Test report approved.

Output of PTT is:

- Test results in ADO.

- Test report.

- Reported bugs.

Release acceptance test

During the release acceptance test (RAT), a subset of tests, selected based on the impact of late changes in the builds, is re-executed. The purpose is to check that the product is intact and not affected by late software changes. If a severe problem that requires correction is found during RAT, a decision meeting with the head of quality is required to decide whether RAT should restart or continue.

Input to RAT is the release candidate.

The entry criterion for RAT is that the "Start of RAT checklist" is fulfilled.

Activities for RAT include:

- Review of the user manuals as part of the test activities in this phase.

- During RAT, regression of the following tests is mandatory:

  - Greenfield installation and configuration.

  - Smoke tests.

  - Import and export.

  - Backup and restore.

  - Endurance tests.

The exit criterion for RAT is that all tests have been performed successfully.
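The mandatory RAT regression set and the exit criterion can be encoded as a simple gate in a pipeline script. A sketch under the assumption that results are collected as a mapping from test name to result value; the test names serve only as illustrative keys, and `rat_gaps` is a hypothetical helper.

```python
# Mandatory RAT regression tests from the list above, used as illustrative keys.
MANDATORY_RAT_TESTS = (
    "Greenfield installation and configuration",
    "Smoke tests",
    "Import and export",
    "Backup and restore",
    "Endurance tests",
)


def rat_gaps(results: dict[str, str]) -> list[str]:
    """Return the tests that block the RAT exit criterion.

    A test blocks exit if it is mandatory but was not run, or if it was run
    and its result is anything other than "Passed".
    """
    missing = [name for name in MANDATORY_RAT_TESTS if name not in results]
    not_passed = [name for name, result in results.items() if result != "Passed"]
    return missing + not_passed


def rat_exit_criteria_met(results: dict[str, str]) -> bool:
    """RAT exit criterion: no mandatory test missing and every executed test passed."""
    return not rat_gaps(results)
```

The mapping can also contain the additional tests selected for late changes; any of them that did not pass blocks the exit just like a mandatory test.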

The output of RAT is a product that is ready for release.

Product test environment

During the product test, the product is placed in a real environment, or in an environment where applications simulate the real conditions created by user applications. Most functions run in the system and create a base load, and the PIT/PTT tests are performed on top of this load.

A test environment provides the setup necessary to run the test cases. Make sure all tests are repeatable and reliable by documenting the hardware and software configurations, auxiliary software and equipment, and equipment under test (EUT) used during the test. The product test environment must be managed from the beginning, and the configuration of the test object, test tools, and hardware shall be documented in the "Test Strategy and Plan" document.

Test result

The result of each test case shall be documented in Azure Test Plans or a test record. For example, in agile testing, all test results are archived in Azure Test Plans together with the test case/description and defined configuration.

It is recommended to create one test plan for creating and maintaining test cases (no test execution; it can be reused for different releases) and, for each product release, a separate test plan for execution, named with the project/product name and version, for example MOM2006_Dev_TestPlan or FreelanceV12.1_Dev_TestPlan. For more details, see How-to Create Test Plans and Test Suites.

If a team chooses to use MS Word to document test results, it must make sure all tests are repeatable and reliable, and record all test results, the test tools used, the test environment setup, the versions of the test artifacts, and remarks to track and resolve failed cases.

Each result shall be one of the following values:

- Passed.

- Failed.

- Blocked.

- Not Applicable.
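When test results are recorded outside Azure Test Plans (for example, in a Word-based test record) and later post-processed, restricting them to these four values avoids free-text outcomes. A minimal Python sketch; the `Outcome` enum and its helpers are hypothetical and are not tied to any Azure Test Plans API.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    """The four allowed product test results."""
    PASSED = "Passed"
    FAILED = "Failed"
    BLOCKED = "Blocked"
    NOT_APPLICABLE = "Not Applicable"


def parse_outcome(text: str) -> Outcome:
    """Map a recorded result to an allowed value; raise ValueError otherwise."""
    return Outcome(text.strip())


def summarize(results: list[str]) -> dict[str, int]:
    """Count results per allowed value, rejecting anything else."""
    counts = Counter(parse_outcome(r) for r in results)
    return {outcome.value: counts[outcome] for outcome in Outcome}
```

For example, `summarize(["Passed", "Passed", "Failed", " Blocked "])` returns two passed, one failed, one blocked, and zero not applicable, while an entry such as "Pass" raises `ValueError` instead of being silently counted.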

Product test deliverables

Product tests produce the following work products:

- Product test case: Manual or automatic.

- Product test result: Each test run generates a test result. The test results are not reviewed or approved during PIT but must be reviewed and approved in PTT.

- Product test report: The product test report documents the outcome of the product test, including tool versions, test plans used, test cases, test scenarios, metrics of requirement coverage, test case results, open bugs, and test conclusions. For details, see the product test report template.

- Bug: When expected functions are not realized or unwanted effects are identified, a bug shall be filed; see below.

Bug management

Bugs shall be handled according to How-to Manage Bugs.

The general rule is that bugs found on non-completed features are treated as development tasks, while bugs found on completed features are handled as bugs.
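The general rule above amounts to a decision based on the state of the affected feature, which a triage script could apply before filing work items. A sketch, assuming a feature counts as completed once it meets its DoD; the `Finding` record and `classify` function are hypothetical, and How-to Manage Bugs remains the governing procedure.

```python
from dataclasses import dataclass
from enum import Enum


class WorkItemType(Enum):
    DEVELOPMENT_TASK = "Task"
    BUG = "Bug"


@dataclass
class Finding:
    # Hypothetical triage record for an issue found during product test.
    summary: str
    feature_completed: bool  # assumed: True once the feature meets its DoD


def classify(finding: Finding) -> WorkItemType:
    """Non-completed feature -> development task; completed feature -> bug."""
    if finding.feature_completed:
        return WorkItemType.BUG
    return WorkItemType.DEVELOPMENT_TASK
```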

Product test traceability

The traceability of product test work is as follows:

- Epic/product capabilities -> product test case.

- Product test case -> product test result.

- Bug -> product test case (if relevant).

- Product test report -> epics, product test cases, product test results, and remaining bugs.
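Gaps in this traceability chain can be found automatically before the test report is written. A minimal Python sketch, assuming the links have been exported into simple mappings; the `TraceModel` layout and the `trace_gaps` helper are hypothetical and not an ADO API. The sketch flags epics without test cases and test cases without results.

```python
from dataclasses import dataclass, field


@dataclass
class TraceModel:
    # Hypothetical export of the traceability links listed above.
    epics: set[str]
    cases_by_epic: dict[str, set[str]] = field(default_factory=dict)  # epic -> test case IDs
    results_by_case: dict[str, list[str]] = field(default_factory=dict)  # test case -> results


def trace_gaps(model: TraceModel) -> dict[str, list[str]]:
    """Report epics without test cases and test cases without results."""
    epics_without_cases = sorted(
        epic for epic in model.epics if not model.cases_by_epic.get(epic)
    )
    # Union of all linked test cases; set().union() with no arguments is empty.
    all_cases = set().union(*model.cases_by_epic.values())
    cases_without_results = sorted(
        case for case in all_cases if not model.results_by_case.get(case)
    )
    return {
        "epics_without_cases": epics_without_cases,
        "cases_without_results": cases_without_results,
    }
```

Both lists should be empty before the product test report claims full requirement coverage.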

Product test tools

The following are typical tools to use and technical areas to manage. The tools used shall be described in the "Test Strategy and Plan" document.

- Azure DevOps (ADO) test plan module: The test plan module structures the tests and presents test case results, visualized using ADO dashboards.

- Virtual machines: They run simulated versions of the product so that pipelines with tests can run independently of the available hardware.

- Containerization: Containerizing different parts of the product, such as the controller, makes it possible to simulate systems with multiple controllers attached to a simulated network over which the containers communicate. Another approach is to containerize the local test environment to create multiple test instances.

- SpecFlow: A behavior-driven development (BDD) framework for .NET. It binds the steps written in Gherkin feature files to C# step definitions, so developers can spend more time implementing product-specific operations and step logic than debugging and explaining code. Compared to XML test case descriptions, BDD steps help bridge the gap between developers, testers, and other stakeholders.

- ELK stack: A data repository. An agent (Filebeat) running in the environment collects the logs, adds metadata, and sends them to the ELK stack. There, relevant logs and data are easier to search and filter, and the information collected from pipeline runs and virtual machines can be visualized.

- Pre-DSAC: Achilles and Nessus are tools that check vulnerability and robustness before formal DSAC is run.