How-to Perform Configuration Audits

The purpose of configuration auditing is to objectively assess the integrity of a product, both from a functional and physical perspective.

Configuration audits are typically classified as functional, physical, or baseline configuration audits, and they are planned, executed, and visualized in Azure DevOps (ADO) using:

- Work items: planned audit activities, audit findings, corrective actions.

- Queries: find data for configuration audits.

- Dashboards: release criteria, traceability issues, data quality.

- Wiki: summary of audits and related actions.

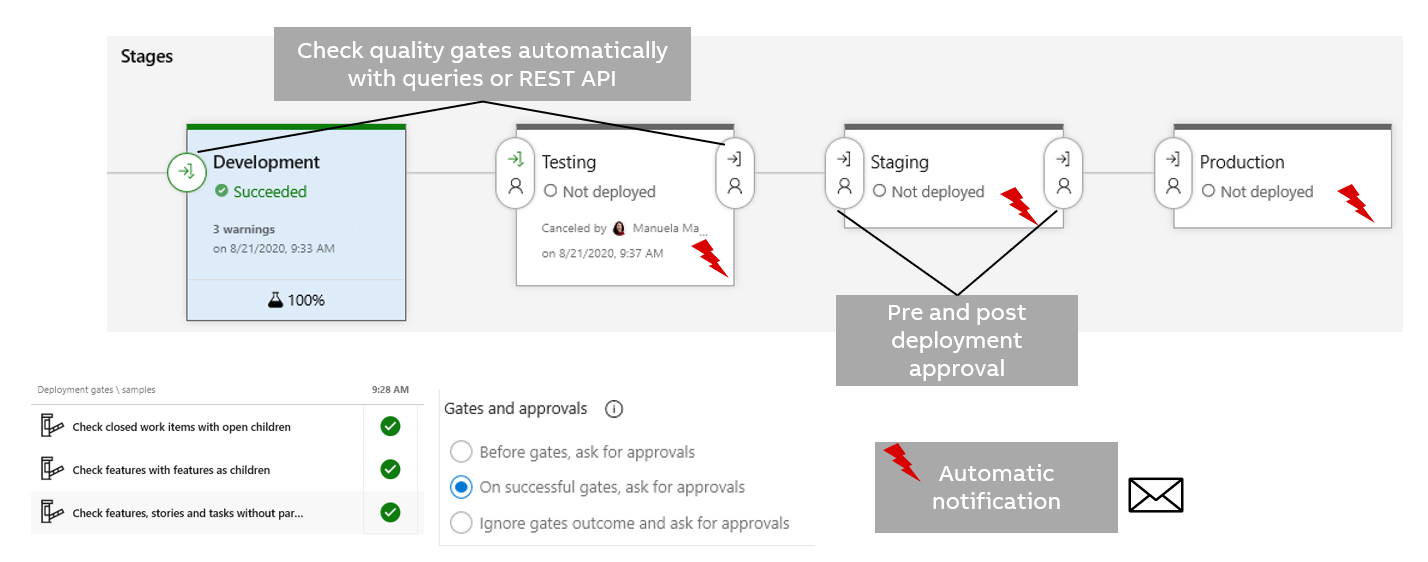

- Build and release pipelines: quality gates, approvals, tools/scripts.

As the conceptual guide Configuration Audits mentions, a large part of a configuration audit can be performed automatically or integrated with ADO.

This guide describes the activities to prepare for, execute, and monitor configuration audits. It also includes descriptions of related queries, stages, and quality gates under Details.

Note: Although this guide contains several examples, the actual checklists and set of queries, criteria, release stages, and roles are decided for each project and documented by the configuration manager, e.g. in the configuration management (CM) plan or on a wiki.

Intended for

- Configuration managers who implement and execute configuration audits.

- Release owners, scrum masters, product owners, or quality control managers who need to visualize configuration audits.

Activities

Prepare for the audit

Define audit checklist

The configuration manager of a project or team defines, updates, and documents the checklist used during configuration audit sessions. The checklist has to be clear and easy to understand for the people involved in the audit. It must not be too generic or abstract, and it must not refer to processes that do not apply to the context.

Example of checklist:

| Questions | Yes / No / Not Applicable | Remarks |
|---|---|---|
| Traceability | | |
| Are traceability issues checked by queries and dashboards or release pipelines? Have these been reviewed and issues fixed? | | |
| Are the test cases and test plans checked by queries and dashboards or release pipelines to detect inconsistent states (e.g. executing tests that are not ready)? Have these been reviewed and issues fixed? | | |
| Configuration management way of working | | |
| Are CM activities tracked as work items in ADO, planned, tracked, and validated before closing them? For example, setting repo policies, changing pipelines, integrating DevOps tools, etc. | | |
| Code | | |
| If using git: Have all the code repositories been configured according to the current guidelines or approved tailoring (repo name, branches, policies)? See DevOps orientation slides from slide 90 “Version Control – Branching Strategy” to slide 106 “Permissions” | | |
| If using Team Foundation Version Control (TFVC): Are the check-in policies configured with mandatory comment and work item association? | | |
| If using TFVC: Are code reviews performed on all changes? This can be checked with a tool or manually. | | |
| If using TFVC: Is a script integrated into the release pipeline to verify that all check-ins have a link to a work item? Were all exceptions verified, if any? | | |
| Are there any non-personal users allowed to do code changes? | | |
| Pipelines | | |
| Are unit tests executed in the build pipelines? | | |
| Is the release process managed with build and release pipelines, without any manual procedures to produce the deliverables after the process is started? | | |
| Is automatic versioning of deliverables configured in all build pipelines? | | |
| Is automatic baselining created at all applicable levels (component/product/system level)? | | |
| Is the retention of builds and releases defined and applied to avoid losing baselines? | | |
| Is at least one static code analysis tool integrated into the build pipeline used for each repo? E.g. SonarQube or Klocwork. | | |
| Is the static code analysis tool integrated so that the pipelines or pull requests fail based on the results of the analysis? | | |
| If not, were the results reviewed and the issues fixed? | | |
| Are quality gates and approvals defined and configured in a release pipeline for release criteria and functional configuration audit (FCA) / physical configuration audit (PCA)? | | |
| If not, are FCA/PCA planned in time for G4, including this manual checklist, plus the items that are supposed to be checked automatically? | | |
| When applicable: was the "Final media" tested to check that it conforms to the planned release and contains all planned items? Including documentation, structure of delivery, etc. See FCA/PCA process guidelines. | | |
| Baselines | | |
| Is any manual baselining of configuration items necessary and is it complete? E.g. work items committed at G2 or PI planning, documentation not stored under source control and included in the baselining done by pipelines… | | |
| Misc. | | |
| Have third-party licensing requirements been met? | | |
| Archiving: was archiving completed as planned? E.g. artifacts, documents, ... | | |

Define audit automation

When first implementing this process, at the program increment (PI) planning or the sprint planning, create work items to implement the automatic part of the configuration audits following this guideline.

When the process is established, plan updates as needed, for example when new components are developed or integrated, or when roles change.

Plan audit sessions

When planning each PI, create work items to execute configuration audit sessions.

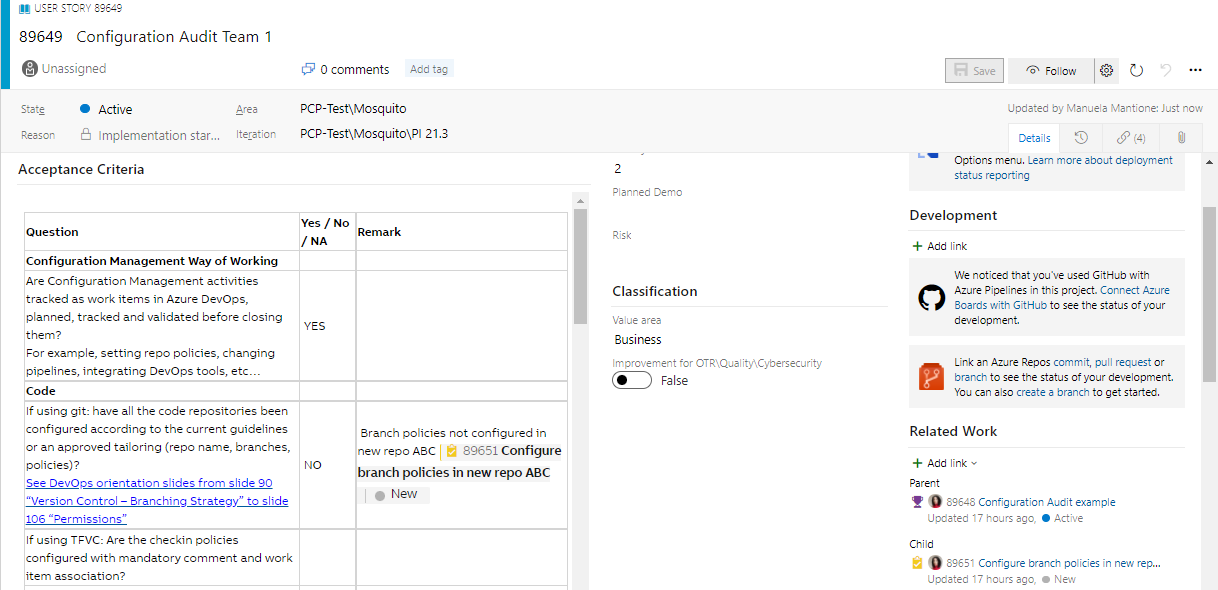

For example, in a project with multiple teams, create one feature for the configuration audit of each PI and one child user story for each team. The user story contains a checklist.

If desired, the set of work items and the checklist can be subdivided differently, for example having more stories with a smaller scope or with smaller sections of the checklist assigned to different people.

The scope of the configuration audit and possibly any special focus areas are specified in the "Description" field.

Once the work item structure is decided, using an Excel template can speed up the creation of the work items.
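
As an illustration of the structure described above (one feature per PI, one child user story per team), the following minimal sketch generates rows that could be pasted into such a template. The tree-style column layout (`Work Item Type`, `Title 1`, `Title 2`), the title wording, and the function names `audit_rows` and `to_csv` are assumptions for this example; verify the layout against the import tool actually used in the project.

```python
import csv
import io

# Hypothetical tree-style column layout; adjust to the template in use.
COLUMNS = ["Work Item Type", "Title 1", "Title 2"]


def audit_rows(pi_name, teams):
    """Return one audit feature row followed by one child user story row per team."""
    rows = [{
        "Work Item Type": "Feature",
        "Title 1": f"Configuration audit {pi_name}",
        "Title 2": "",
    }]
    for team in teams:
        # A title in the second title column marks the row as a child of the feature.
        rows.append({
            "Work Item Type": "User Story",
            "Title 1": "",
            "Title 2": f"Configuration audit {pi_name} - {team}",
        })
    return rows


def to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(audit_rows("PI 7", ["Team 1", "Team 2"])))
```

The checklist itself is not generated here; it would still be added to each user story as described above.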

It is recommended to plan the audit session one or two sprints before the end of the PI, to leave time to close the resulting actions before the PI ends.

It is a good habit to involve someone from a different team in an audit session.

Execute the audits

When executing a configuration audit session, participants write their comments on the checklist of the user story.

Any major actions to close gaps or solve issues are noted in the comments, linked as child tasks, and assigned to the respective team. If an action is quick enough to complete during the audit, a comment is sufficient.

The configuration audit user story is closed when all its child tasks are closed.

The configuration audit feature is closed when all its child user stories are closed.

Example:

- Feature (Sprint 6)
    - User Story Team 1 (Sprint 4)
        - Action 1 (Sprint 4)
        - Action 2 (Sprint 6)
    - User Story Team 2 (Sprint 5)
        - Action 3 (Sprint 5)
        - Action 4 (Sprint 6)
    - User Story Team 3 (Sprint 6)
        - Action 5 (Oh, no! We have no time in this PI, we cannot close the story and the feature!)
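
The closure rules above can be sketched as a small check on a work item tree. This is an illustrative model only: the `WorkItem` class, the state names `Active` and `Closed`, and the helper names are assumptions, not part of ADO.

```python
from dataclasses import dataclass, field


@dataclass
class WorkItem:
    title: str
    state: str = "Active"  # hypothetical state names: "Active" or "Closed"
    children: list = field(default_factory=list)


def can_close(item):
    """A work item may be closed only when every direct child is closed."""
    return all(child.state == "Closed" for child in item.children)


def blocking_items(item):
    """Return the titles of all open descendants that keep `item` open."""
    blockers = []
    for child in item.children:
        if child.state != "Closed":
            blockers.append(child.title)
        blockers.extend(blocking_items(child))
    return blockers


if __name__ == "__main__":
    story_1 = WorkItem("User Story Team 1", "Closed",
                       [WorkItem("Action 1", "Closed"), WorkItem("Action 2", "Closed")])
    story_3 = WorkItem("User Story Team 3", children=[WorkItem("Action 5")])
    feature = WorkItem("Feature", children=[story_1, story_3])
    print(can_close(feature), blocking_items(feature))
```

In this model, an open action such as Action 5 keeps both its user story and the feature open, which is why audit sessions are best planned early in the PI.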

Monitor and close actions

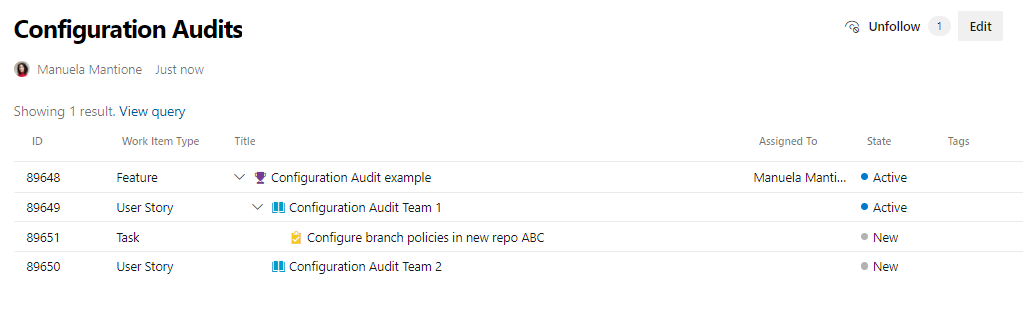

Make a wiki page for efficient monitoring of configuration audits and follow-up of identified actions. Insert the query of configuration audit features with the tree of work items. This can be a reference for anyone who needs to visualize the planned and past configuration audits, for example for quality audits or future configuration audits.

Make a dashboard to visualize the relevant queries listed in the section below, with color coding to help interpret results and detect issues.

Example:

Details

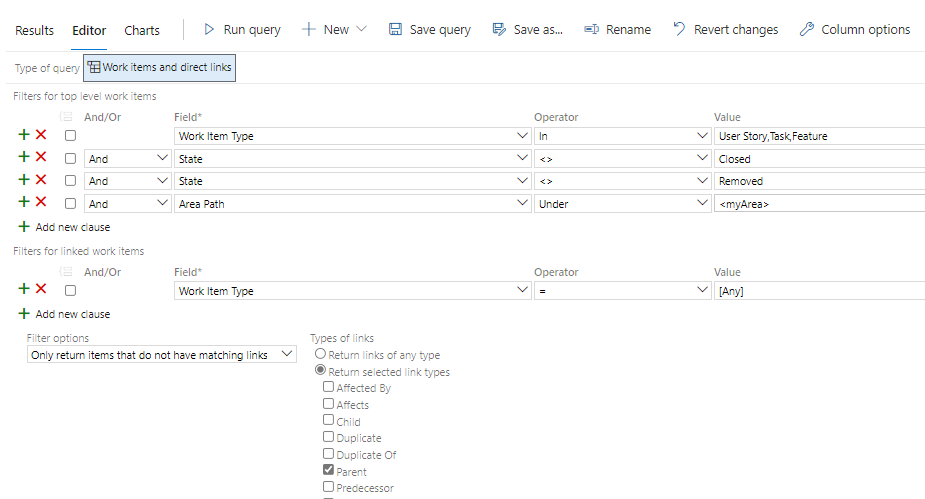

Create queries

Work item queries are a systematic way to find data for configuration audits, visualize it, and make decisions.

Create release criteria queries

Queries about scope, defects, and quality KPIs as required by the release criteria are valid as part of the FCA.

Reuse the release criteria queries if they already exist. Otherwise, create the missing ones in collaboration with the release owner and quality control manager, according to the latest release criteria.

Examples:

- State of planned epics, features, user stories, and bugs.

- State of risks.

- Open regression bugs.

- Open critical/high bugs.

- Open security bugs.

- ...
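
A release criteria query such as "open critical/high bugs" could be written in WIQL (Work Item Query Language). The sketch below only builds the query text. The function name `open_bugs_wiql`, the `Closed` state, and the severity values are assumptions that depend on the process template used in the project.

```python
def open_bugs_wiql(severities, closed_state="Closed"):
    """Build a flat WIQL query for bugs that are not closed and have one of the given severities.

    The severity values and the closed state name are assumed to be trusted
    constants taken from the project's process template.
    """
    quoted = ", ".join(f"'{severity}'" for severity in severities)
    return (
        "SELECT [System.Id], [System.Title], [System.State] "
        "FROM WorkItems "
        "WHERE [System.TeamProject] = @project "
        "AND [System.WorkItemType] = 'Bug' "
        f"AND [System.State] <> '{closed_state}' "
        f"AND [Microsoft.VSTS.Common.Severity] IN ({quoted})"
    )


if __name__ == "__main__":
    # Assumed severity values; check the picklist in the actual project.
    print(open_bugs_wiql(["1 - Critical", "2 - High"]))
```

The resulting text can be pasted into a WIQL editor or saved as a shared query, so the same definition feeds both the dashboard and the quality gate.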

Create traceability queries

- Closed work items with open children.

- Nested work items of the same type, e.g. features with features as children.

- Work items without parents, e.g. features, user stories, and tasks.

- Inconsistent state of test cases and test plans.

- Definition of ready (DoR) or definition of done (DoD) not completed.

- ...
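
To make the logic of the first three traceability checks concrete, here is a minimal sketch that runs them on exported work item data instead of in ADO. The record layout (`id`, `type`, `state`, `parent`), the `Closed` state, and the assumption that only epics may lack a parent are illustrative choices, not project rules.

```python
def traceability_issues(items, closed_state="Closed", top_level_type="Epic"):
    """Return IDs of work items that violate three basic traceability rules."""
    by_id = {item["id"]: item for item in items}
    issues = {
        "closed_with_open_children": set(),  # parent IDs
        "same_type_as_parent": set(),        # child IDs
        "missing_parent": set(),             # orphan IDs
    }
    for item in items:
        parent = by_id.get(item.get("parent"))
        if parent is None:
            # Only the top-level type is allowed to have no parent.
            if item["type"] != top_level_type:
                issues["missing_parent"].add(item["id"])
            continue
        if parent["state"] == closed_state and item["state"] != closed_state:
            issues["closed_with_open_children"].add(parent["id"])
        if parent["type"] == item["type"]:
            issues["same_type_as_parent"].add(item["id"])
    return issues


if __name__ == "__main__":
    sample = [
        {"id": 1, "type": "Epic", "state": "Active", "parent": None},
        {"id": 2, "type": "Feature", "state": "Closed", "parent": 1},
        {"id": 3, "type": "User Story", "state": "Active", "parent": 2},
        {"id": 4, "type": "Feature", "state": "Active", "parent": 2},
        {"id": 5, "type": "Task", "state": "Active", "parent": None},
    ]
    print(traceability_issues(sample))
```

In ADO itself, each rule would normally be a separate saved query so that it can be shown on a dashboard or used as a quality gate.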

Create process-related queries

The list of queries can differ based on the project and process requirements, and is documented by the configuration manager, e.g. in the CM plan or in a wiki.

- Planned/completed configuration audits.

- Configuration audit features with the tree of work items.

- Planned/completed open source scan and approval.

- Planned/completed export control.

- ...
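
The query "configuration audit features with the tree of work items" is a tree query, which WIQL expresses over work item links. The sketch below builds such a query text under the assumption that audit features are marked with a tag; the tag name `ConfigurationAudit` and the function name `audit_tree_wiql` are hypothetical.

```python
def audit_tree_wiql(tag="ConfigurationAudit"):
    """Build a recursive WIQL tree query: tagged features plus all their descendants."""
    return (
        "SELECT [System.Id], [System.WorkItemType], [System.Title], [System.State] "
        "FROM WorkItemLinks "
        "WHERE ([Source].[System.TeamProject] = @project "
        "AND [Source].[System.WorkItemType] = 'Feature' "
        f"AND [Source].[System.Tags] CONTAINS '{tag}') "
        # Follow parent-to-child links only.
        "AND ([System.Links.LinkType] = 'System.LinkTypes.Hierarchy-Forward') "
        # Accept children of any work item type.
        "AND ([Target].[System.WorkItemType] <> '') "
        "MODE (Recursive)"
    )


if __name__ == "__main__":
    print(audit_tree_wiql())
```

If the project identifies audit features by area path or title instead of a tag, only the `[Source]` clause needs to change.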

Examples of stages and quality gates in a release pipeline

Development stage

The latest development version for daily work and experiments.

Sanity checks quality gates (non-blocking):

- Check closed work items with open children.

- Check features with features as children.

- Check features, stories, and tasks without parents.

No approvals.

Testing stage

Environment for testing milestone builds:

- Check closed work items with open children.

- Check features with features as children.

- Check features, stories, and tasks without parents.

- Check work items not approved, or work items planned for different versions.

- Check open regression bugs.

- Check open critical and high bugs.

- Check open priority #1 bugs.

- Check open security bugs.

- Check open OSS scan and approval feature.

- Check open configuration audit user story.

Approval: configuration manager, release owner.

Staging stage

Simulation of the production environment, for testing with realistic data and scenarios before the final approval to release the software:

- Check if epics, features, stories, tasks, and bugs of the current PI are closed.

- Check open regression bugs.

- Check open critical and high bugs.

- Check open priority #1 bugs.

- Check open security bugs.

- Check if the OSS scan and approval feature is complete.

- Check if the manual configuration audit is complete.

- Check open risks.

Approval: release owner.

Production stage

The currently released version of the application:

- Check if epics, features, stories, tasks, and bugs of the current PI are closed.

- Check open regression bugs.

- Check open critical and high bugs.

- Check open priority #1 bugs.

- Check open security bugs.

- Check open risks.

Approval: release owner.
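
The difference between non-blocking sanity checks (Development stage) and blocking quality gates (later stages) can be sketched as follows. This is an illustrative model, not how ADO evaluates gates: the check keys, the abbreviated `STAGE_GATES` table, and the function `evaluate_stage` are assumptions, and only two stages are shown.

```python
SANITY_CHECKS = [
    "closed_with_open_children",
    "features_with_feature_children",
    "items_without_parents",
]

# Abbreviated, hypothetical configuration covering two of the example stages.
STAGE_GATES = {
    "Development": {"blocking": False, "checks": SANITY_CHECKS},
    "Testing": {
        "blocking": True,
        "checks": SANITY_CHECKS + [
            "open_regression_bugs",
            "open_critical_high_bugs",
            "open_security_bugs",
        ],
    },
}


def evaluate_stage(stage, issue_counts):
    """Return (may_proceed, failed_checks) for a stage.

    `issue_counts` maps a check name to the number of work items its query
    returned. A check with no reported count is treated as failed.
    """
    gate = STAGE_GATES[stage]
    failed = [check for check in gate["checks"] if issue_counts.get(check) != 0]
    may_proceed = not failed or not gate["blocking"]
    return may_proceed, failed


if __name__ == "__main__":
    counts = {name: 0 for name in STAGE_GATES["Testing"]["checks"]}
    counts["open_regression_bugs"] = 1
    print(evaluate_stage("Testing", counts))
```

Treating a missing count as a failure is a deliberately conservative choice: a gate whose query did not run should not pass silently.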

Add quality gates and approvals to pipelines

Overview:

Note: The stages in this guideline are an example and can differ from the actual stages defined in a project.

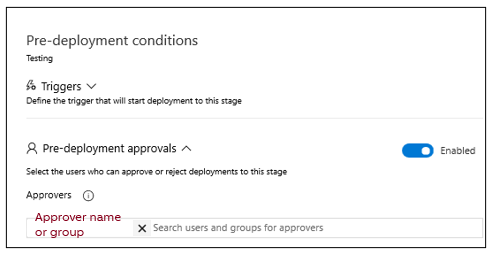

Configure approvals

At each stage, decide if an approver is needed and configure approval, according to the roles and processes in the project.

In classic release pipelines, pre-deployment and post-deployment approvers can be configured, or manual validation can be defined in an agentless job.

Example:

In YAML (YAML Ain't Markup Language) pipelines, manual validation in an agentless job or environment-based authorization can be configured.

Example:

    - stage: 'Development'
      displayName: 'Development'
      dependsOn: Integration
      jobs:
      - job: 'ApproveDeploymentToDev'
        pool: server
        steps:
        - task: ManualValidation@0
          inputs:
            notifyUsers: 'xxxxxx'
            instructions: 'Approve deployment to Dev Test environment?'
      - job: 'DevelopmentTests'
        dependsOn: 'ApproveDeploymentToDev'
        pool: MyAgentPool

Links to documentation:

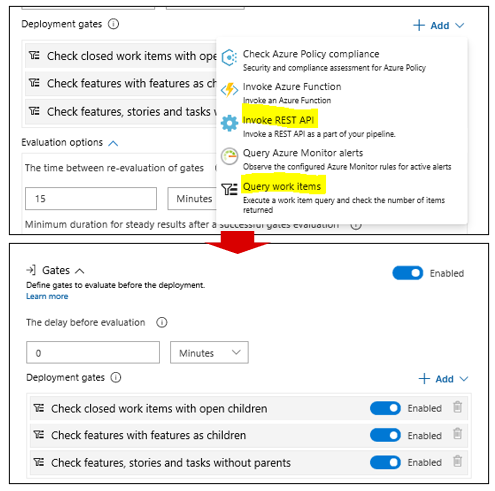

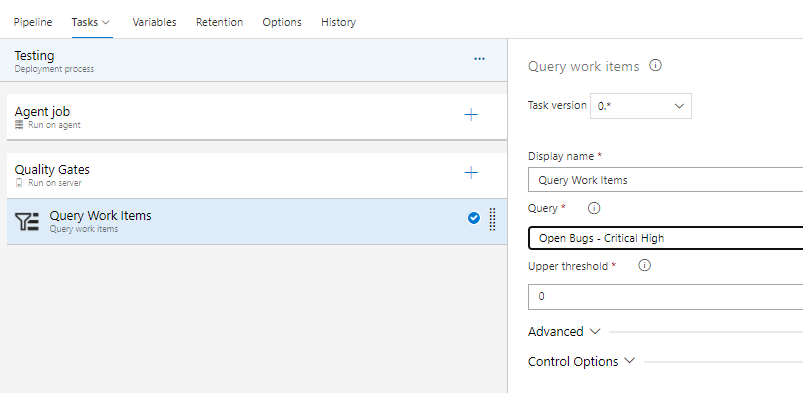

Configure quality gates with queries

For each stage, add queries as quality gates.

In classic release pipelines, configure queries as quality gates.

Example:

If the maximum number of queries is reached, more queries can be added to an agentless job.

In YAML pipelines, "queryWorkItems" tasks in an agentless job can be configured similarly. Link to documentation: